In the past few months, I’ve been surprised by how many times I’ve had to play a video in Flash (in either FLV or F4V format) and know its current time (or current frame) accurately – especially because we’ve been using a lot of shape tracking to project a plane of Flash content on top of a video being played (with proper perspective distortion).

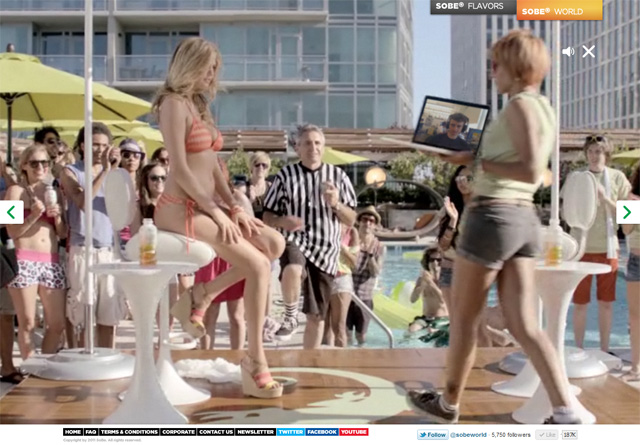

Examples of this are in our work for SoBe’s Staring Contest (try the “experimental version”), SoBe’s Drop of Flavor, and 5Gum’s 5React, which was created more than a year ago (select “United States”, click “Find out”, and approve the Facebook requests – don’t worry, it’s not going to post anything to your wall, the campaign is over; you may also need to reload the page a few times after approval, as recent changes in Facebook login have broken the website in some browsers).

It’s developing websites like these that made me realize how hard it is to know a video’s exact current position in time (and no, saving the video inside a SWF container and then reading the MovieClip’s currentFrame is not a real solution). One would expect the NetStream’s time property to be the way to go, but it is extremely inaccurate; I have the feeling this property is only updated when video keyframes are reached. And for the kind of tracking we need to do, being one frame off in our calculations is already too much.

For 5React, I had to know the video’s current frame, so I could write the content to the fake TV sets that show during the introductory video playback. After much testing and researching, I ended up using the number of NetStream’s decodedFrames (surprisingly absent from the documentation) added to the number of droppedFrames to properly determine the video’s current frame.
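As a rough sketch of that idea (written in TypeScript rather than ActionScript, with a hypothetical `FrameCounters` shape standing in for the two counters read from the player), the estimate is just the sum of both numbers:

```typescript
// Hypothetical stand-in for the two counters read from the player.
// The names mirror the properties mentioned above; the shape is an assumption.
interface FrameCounters {
  decodedFrames: number;
  droppedFrames: number;
}

// Estimated index of the frame currently on screen. This only holds for
// uninterrupted playback from the start of the video, because both
// counters keep accumulating and are never reset.
function estimateCurrentFrame(counters: FrameCounters): number {
  return counters.decodedFrames + counters.droppedFrames;
}
```

For example, 120 decoded frames plus 3 dropped frames would put the estimate at frame 123.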

This approach has two big caveats, however. First, these properties accumulate as the video is played – they don’t take the playback position into consideration. This means that if you stop the video after one second and start playing again from the beginning, the number of decodedFrames will not reset – it’ll just continue to accumulate. So the technique is only useful for linear, continuous playback, where the user can’t pause the video or seek to a different time.

The second caveat is that the properties are not that reliable. In theory, droppedFrames sounds amazing, but apparently it’s only increased when Flash decides to drop a frame itself – if the Flash Player hangs for a fraction of a second (say, when you right-click the Flash movie), it has no impact on that number, meaning the sync is lost. The same happens if you switch away from the window playing the SWF – neither decodedFrames nor droppedFrames is counted. This behavior is easy to notice with the 5React animation – if you just watch the whole thing, it’ll perform flawlessly. If you’re on a slow computer, or if you right-click the SWF prior to execution, or if you switch to another browser tab or window and then switch back, tracking will be out of sync.

Because of these issues, when implementing the tracking solution for SoBe’s Staring Contest, I knew I couldn’t rely on that technique – I needed to allow the user to scrub the video and see the tracking matching the video time – so I tried something different: encoding cuepoints in the video with the number of the current frame.

The problem with this approach is, however, threefold. For one thing, encoding the cuepoints with the necessary information into the video is a daunting task – one that can be helped by scripts, but still, a hard task.
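To give an idea of what such a helper script has to produce, here is a minimal sketch in TypeScript. It only builds the list of cue points (one per frame, named after the frame number); the `FrameCuePoint` shape and the function name are hypothetical, and actually injecting the list into the video still depends on whatever encoding tool is used.

```typescript
// Hypothetical description of one cue point: a name and a time in seconds.
interface FrameCuePoint {
  name: string;
  time: number;
}

// Builds one cue point per video frame, named after the frame number and
// placed at the time where that frame starts (frame / fps).
function buildFrameCuePoints(totalFrames: number, fps: number): FrameCuePoint[] {
  const cuePoints: FrameCuePoint[] = [];
  for (let frame = 0; frame < totalFrames; frame++) {
    cuePoints.push({ name: String(frame), time: frame / fps });
  }
  return cuePoints;
}
```

Even a short video ends up with hundreds of entries this way, which is part of why the task is so tedious.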

The second problem is that using real cuepoints forces you to use the “old” On2 VP6 video codec instead of the more modern, H.264-based F4V format. The videos for the above website had to be HD-quality videos, and being forced to use FLV for those meant bigger files, lower quality, and even worse rendering performance.

The third and final problem is that even though FLV cuepoints are extremely accurate, they’re still not accurate enough. Apparently, video frames can be decoded and rendered on a separate thread from the one where the cuepoints for that specific frame are triggered, so we’ve run into situations where the tracked plane is not rendered properly (and, like I said, even a 1 frame offset is already too much unless the video being tracked is very slow). This is a problem that is hard to put a finger on, and it depends largely on Flash Player version, system version, and number of processors available on a machine – but it suffices to say that, while the cuepoint decoding and rendering works pretty well on most machines, it does fail pretty badly on a top-of-the-line Mac machine with the latest version of the Flash Player for no apparent reason. This is noticeable on the Drop of Flavor website I mentioned above, or on the Staring Contest, when playing the “victory” video for the experimental version and scrubbing through the video during the part where the model shows you the smartphone with your photo: scrubbing too much, too quickly, will cause the tracked shape to be rendered somewhere it doesn’t belong.

I’ve been discussing this with Eric Decker – who developed the Drop of Flavor website – and he believes he has come up with a somewhat better solution, product of a crazy idea we’ve discussed in the past. Can you guess what it is?

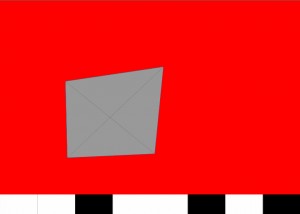

He’s encoding the current frame’s number as binary, at the bottom of the video, in the shape of rectangles that go on and off. He then reads the color information (via getPixel) and converts that to the proper number. It works well, and it’s very accurate – but it requires encoding the video with this information (created with a Photoshop action that creates a layer for every number), which not only takes up screen space and adds to the file size, but also forces you to take the useless space into account when rendering the video.
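The decoding side can be sketched roughly as follows, in TypeScript rather than ActionScript. This is not Eric’s actual code: it assumes one color has already been sampled from the middle of each rectangle (as a 24-bit `0xRRGGBB` value, like the ones getPixel returns), that the first sample is the least significant bit, and that a bright rectangle means 1. The brightness threshold is there because video compression rarely leaves pure black or pure white.

```typescript
// Brightness (0-255) of a 24-bit 0xRRGGBB color, as a plain channel average.
function brightness(rgb: number): number {
  const r = (rgb >> 16) & 0xff;
  const g = (rgb >> 8) & 0xff;
  const b = rgb & 0xff;
  return (r + g + b) / 3;
}

// Turns one sampled color per rectangle into a frame number.
// Assumptions: samples[0] is the least significant bit, and a rectangle
// counts as "on" when its brightness reaches the threshold.
function decodeFrameNumber(samples: number[], threshold: number = 128): number {
  let frame = 0;
  for (let i = 0; i < samples.length; i++) {
    if (brightness(samples[i]) >= threshold) {
      frame += Math.pow(2, i);
    }
  }
  return frame;
}
```

With three rectangles sampled as near-white, near-black and near-white, the bits read 1, 0, 1 from least to most significant, which gives frame 5.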

He has more information about that here. That we even need to resort to this kind of hack is something that has been driving me crazy, so I’d just like to put something out there:

We need to know the current frame – or accurate time position – of a video being played in Flash, regardless of the format, without any crazy hack.

I don’t know the particularities of how video decoding and rendering are programmed in the Flash Player. However, it does seem to me to be a little bit odd that we can’t have a property like “currentFrame” that indicates the actual number of the frame rendered on the screen, or a “time” that is updated on every frame.

I like to believe I’m not the kind of developer that likes to bitch and moan about features. And I’ve already seen Adobe constantly delivering features I wanted in the Flash Player, like better sound control and, more recently, native JSON parsing. But, seriously… think about this: we just need to know the video’s current frame or time.

Have you ever run into this issue? Am I the only one who wants this functionality to be that accurate? Or is it something a lot of people have run into? Make your voice, and opinion, heard – I’ve created a feature request entry on Adobe’s bug tracker for Flash Player (requires login).

Love the solution – you know – that could be automated with Adobe ExtendScript (which I have used for batching actions / processes in AfterEffects before).

An accurate totalFrames (as well as currentFrame) property would be very handy. Also, since we are on the topic, being able to capture frames/BitmapData from StageVideo would be quite nice.

As you said, having FLV in an SWF is not really a solution, but that’s what I am using for my current project – and well – the video is not HD, and quality could be better. Then, there are audio sync issues.

Thank you for this post and solution. I banged my head against this over a year ago working on a Dragon’s Lair-esque prototype. The end solution of approximating time with cue points was painful.

It’s an interesting hack, but until Adobe provides a way to get the current frame this does look like the best kluge. Another thank you for registering this in Jira; you have my vote.

Cheers.

Zeh, how do you access the experimental version of the staring contest?

@Tomek: after you’re past the intro video for the game, there’s a big button to start the game (that button starts the mouse-based game). If you have a webcam, a button for the “experimental” version will show up on the bottom right of that screen. That one starts the webcam-based game.

Hi,

The solution is just perfect !

It reminds me of a guy who was encoding 3D coords in the texture of the model, but that’s another story… (http://en.nicoptere.net/?p=1017)

I read Eric’s post and he wrote that you had an After Effects expression that generates the barcode… if you could share this it would be amazing 🙂

thanks

Sorry andrzej, unfortunately I don’t have it anymore. I remember building it as a test but I honestly can’t find it anymore, so I’m afraid I may have deleted it.

It was just a bunch of solid squares, where the squares would be white and their background black. Then each square had an After Effects expression (based on the frame number) that controlled its opacity, depending on the “digit”. That way, for the first “digit”, opacity was (frame % 2 > 0 ? 100 : 0); for the second “digit”, opacity was (frame % 4 > 0 ? 100 : 0); and so on and so forth. Something like that. Shouldn’t be hard to redo, but I don’t know expressions well enough to just go and redo it quickly.
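For anyone trying to rebuild it: taken literally, a test like frame % 4 > 0 stays on for frames 1, 2 and 3, so a strict binary digit needs a division before the modulo. Here is a minimal sketch of the per-square logic in TypeScript (not the original expression, which is lost; the function name is made up, and the same arithmetic should carry over to an expression with few changes):

```typescript
// Opacity (0 or 100) for the square that represents binary digit `bit`
// (bit 0 = least significant) on a given frame number.
function squareOpacity(frame: number, bit: number): number {
  // Shift the wanted digit down to the ones place, then test whether it is 1.
  return Math.floor(frame / Math.pow(2, bit)) % 2 === 1 ? 100 : 0;
}
```

On frame 5 (binary 101), the first and third squares come out at 100 and the second at 0.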

Hello Zeh,

No problem, I have made a JPG sequence with an AIR project (I’m a Flash dev), then I made a barcode video that I can now embed in After Effects, Final Cut, etc.

Everything works: I’m detecting the right frame, but I still have some sync problems. They are visible only while the video is playing; when it’s paused the sync is perfect. I don’t know why it happens. My real framerate is 60, so it’s not a performance issue…

Could you help me with this? maybe i could send you a link with a test and with the source?

Well, it can be either of two things:

1. a problem with your code not detecting the proper frame

2. a problem with loss of sync

For (2), I think videos are decoded and rendered at a different time than the actual frame renders… meaning a normal ENTER_FRAME event might come up too late and not detect the proper frame. If that’s the case, I’m not sure much can be done, unless it’s a fixed video frame offset – then you could just apply it all the time when reading the current frame.

If it’s (1), though, it’s a matter of finding the bug.
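If the offset does turn out to be constant, applying it is trivial. A minimal sketch in TypeScript, where the function name is made up and the offset value has to be found by testing on the target machines:

```typescript
// Shifts the detected frame by a fixed offset and keeps the result
// inside the valid range of frame indices (0 to totalFrames - 1).
function applyFrameOffset(detectedFrame: number, offset: number, totalFrames: number): number {
  const corrected = detectedFrame + offset;
  return Math.min(Math.max(corrected, 0), totalFrames - 1);
}
```

An offset of 1, for instance, would make the tracking data for frame 43 get used whenever frame 42 is detected.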

Hello, Zeh!

I read your post a while ago while searching for solutions for sync issues. I didn’t find any code from Google, so I created AE and AS3 scripts. You can check ’em here: http://nikohelle.net/2011/11/25/as3-perfect-video-sync-with-embedded-frame-numbers/

Thank you for posting this. I’m just experiencing the same problem and this article will help me explain what’s happening. Keep on sharing! 😉